In today’s digital world, real-time data processing is a game-changer for modern apps. Whether you’re tracking user activity, managing inventory, or keeping an eye on transactions, the ability to respond instantly to data changes can make a huge difference. By using AWS DynamoDB Streams, you can capture these changes as they happen and build event-driven apps that scale effortlessly. In this article, we’ll walk you through how AWS DynamoDB Streams work, explore their key features, and show you how to leverage them for your projects.


What Are AWS DynamoDB Streams?

AWS DynamoDB Streams is a feature that captures a time-ordered sequence of item-level changes in your DynamoDB table. This sequence, referred to as a stream, records every PutItem, UpdateItem, and DeleteItem operation that actually changes an item. As a result, you get a near-real-time log of changes, with stream records retained for 24 hours. DynamoDB Streams is enabled on a table-by-table basis, which means you have fine-grained control over which data changes you want to track.

Key Features of DynamoDB Streams:

- Real-Time Data Capture: DynamoDB Streams are great for capturing data changes in real time. Consequently, you can instantly react to any update, insert, or delete operation as it happens.

- Scalability: Additionally, just like DynamoDB tables, DynamoDB Streams automatically scale up to handle large amounts of data without any fuss. Therefore, you don’t have to worry about manual adjustments.

- Seamless Integration: On top of that, DynamoDB Streams work really well with other AWS services like Lambda, Kinesis, and Redshift. As a result, it’s easy to use them for everything from data analytics to real-time processing.

- Event Processing: And here’s another cool thing—DynamoDB Streams lets you build event-driven architectures. Thus, you can set up your system to automatically trigger processes or workflows based on any changes in your data.

Why Use AWS DynamoDB Streams?

AWS DynamoDB Streams offer a powerful way to extend the capabilities of your DynamoDB tables. So, why should you consider using them? Let’s dive into some everyday use cases where DynamoDB Streams can really make a difference:

- Data Replication Across Regions: In today’s global business environment, ensuring that your data is available across multiple regions is crucial. With DynamoDB Streams, you can replicate your data in near real-time across different AWS regions. This approach supports high availability and low latency for users all around the world.

- Audit Logging and Compliance: For businesses in highly regulated industries, maintaining a detailed audit log of all changes to critical data is essential. In this case, DynamoDB Streams offers a reliable way to track every modification made to your data. As a result, you can meet compliance requirements and conduct forensic investigations if needed.

- Real-Time Analytics: When immediate insights are necessary—such as for fraud detection or operational monitoring—DynamoDB Streams prove invaluable. By leveraging this feature, you can process and analyze data as it’s updated. Moreover, by feeding stream data into analytics platforms like Amazon Kinesis, you can generate real-time reports and dashboards.

- Triggering Downstream Workflows: Many applications require specific actions to be triggered based on data changes. For example, you might want to send a notification when an order is placed or update a search index when a product’s details change. With DynamoDB Streams, you can automatically and instantly trigger such workflows.

Setting Up AWS DynamoDB Streams

Getting started with DynamoDB Streams is straightforward, and you can set it up in just a few minutes. To begin with, here’s a simple, step-by-step guide to enabling and using DynamoDB Streams:

Step 1: Enable Streams on Your DynamoDB Table

- To get started, log in to the AWS Management Console and head over to the DynamoDB service.

- Select the DynamoDB table where you want to enable streams.

- Click on the “Manage Stream” button in the table’s settings.

- Choose the stream view type that best suits your needs (more on stream view types later).

- Save your changes to enable the stream.
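If you prefer to script Step 1 instead of clicking through the console, the same change can be made with the DynamoDB `UpdateTable` API. The sketch below is a minimal illustration, not the only way to do it: the helper name `enable_stream` and the table name `Orders` are made up for this example, and the client is passed in so the function works with a `boto3.client("dynamodb")` object or a test double.

```python
def enable_stream(dynamodb_client, table_name, view_type="NEW_AND_OLD_IMAGES"):
    """Turn on a stream for an existing table and return the stream ARN.

    `dynamodb_client` is assumed to be a boto3 DynamoDB client
    (or anything exposing the same update_table method).
    """
    allowed = {"KEYS_ONLY", "NEW_IMAGE", "OLD_IMAGE", "NEW_AND_OLD_IMAGES"}
    if view_type not in allowed:
        raise ValueError(f"Unknown stream view type: {view_type}")

    response = dynamodb_client.update_table(
        TableName=table_name,
        StreamSpecification={"StreamEnabled": True, "StreamViewType": view_type},
    )
    # The table description in the response carries the ARN of the new stream.
    return response["TableDescription"].get("LatestStreamArn")


# Example usage (hypothetical table name, requires boto3 and AWS credentials):
# import boto3
# stream_arn = enable_stream(boto3.client("dynamodb"), "Orders")
```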

Step 2: Integrate with AWS Lambda

- Create a new Lambda function via the AWS Lambda console.

- Set DynamoDB Streams as the trigger for your Lambda function. This means that every time a change occurs in your DynamoDB table, the Lambda function will be invoked automatically.

- Write the Lambda function code to process the data captured by the stream.
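The trigger from Step 2 can also be created programmatically through Lambda's `CreateEventSourceMapping` API. This is a rough sketch under a few assumptions: the helper name `attach_stream_trigger` is invented for illustration, the function already exists, and its execution role is allowed to read the stream.

```python
def attach_stream_trigger(lambda_client, stream_arn, function_name, batch_size=100):
    """Connect a DynamoDB stream to a Lambda function and return the mapping UUID.

    `lambda_client` is assumed to be a boto3 Lambda client
    (or anything exposing the same create_event_source_mapping method).
    """
    response = lambda_client.create_event_source_mapping(
        EventSourceArn=stream_arn,
        FunctionName=function_name,
        # LATEST starts with new changes; TRIM_HORIZON would start with the
        # oldest records still held in the stream.
        StartingPosition="LATEST",
        BatchSize=batch_size,
    )
    return response["UUID"]


# Example usage (hypothetical names, requires boto3 and AWS credentials):
# import boto3
# attach_stream_trigger(boto3.client("lambda"), stream_arn, "process-order-changes")
```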

Here’s an expanded example of a Lambda function that processes DynamoDB Streams:

```python
import json

def lambda_handler(event, context):
    # Iterate through each record in the stream event
    for record in event['Records']:
        # Check the event type (INSERT, MODIFY, REMOVE)
        event_type = record['eventName']
        # OldImage/NewImage exist only if the stream view type includes them,
        # so read them with .get() instead of assuming they are present
        change = record['dynamodb']

        # Handle INSERT events
        if event_type == 'INSERT':
            new_image = change.get('NewImage')
            print(f"New item added: {json.dumps(new_image)}")

        # Handle MODIFY events
        elif event_type == 'MODIFY':
            old_image = change.get('OldImage')
            new_image = change.get('NewImage')
            print(f"Item modified from {json.dumps(old_image)} to {json.dumps(new_image)}")

        # Handle REMOVE events
        elif event_type == 'REMOVE':
            old_image = change.get('OldImage')
            print(f"Item removed: {json.dumps(old_image)}")

    return 'Processing complete'
```

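This Lambda function handles INSERT, MODIFY, and REMOVE events, which means you can keep track of how items in your DynamoDB table change over time. The OldImage and NewImage fields appear in a record only when the stream view type includes them, so NEW_AND_OLD_IMAGES is the view type that fits this example.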
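One thing to keep in mind: the images in a stream record use DynamoDB's typed JSON format (for example `{"S": "abc"}` for a string), not plain values. The snippet below is a small hand-rolled converter, offered as a sketch only. The function names are made up for this example, binary types are deliberately left out, and boto3's `TypeDeserializer` in `boto3.dynamodb.types` is the ready-made alternative if you already depend on boto3.

```python
from decimal import Decimal


def from_dynamodb_json(value):
    """Convert one DynamoDB-typed attribute value into a plain Python value."""
    type_tag, inner = next(iter(value.items()))
    if type_tag == "S":
        return inner
    if type_tag == "N":
        return Decimal(inner)  # numbers arrive as strings
    if type_tag == "BOOL":
        return inner
    if type_tag == "NULL":
        return None
    if type_tag == "L":
        return [from_dynamodb_json(v) for v in inner]
    if type_tag == "M":
        return {k: from_dynamodb_json(v) for k, v in inner.items()}
    if type_tag == "SS":
        return set(inner)
    if type_tag == "NS":
        return {Decimal(n) for n in inner}
    # Binary types (B, BS) are not handled in this sketch.
    raise ValueError(f"Unsupported DynamoDB type tag: {type_tag}")


def image_to_dict(image):
    """Convert a NewImage/OldImage mapping into a plain dict (empty if missing)."""
    return {name: from_dynamodb_json(v) for name, v in (image or {}).items()}
```

Inside the handler you could then call `image_to_dict(record['dynamodb'].get('NewImage'))` to work with ordinary Python values.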


Stream View Types in AWS DynamoDB Streams

DynamoDB Streams offer four distinct stream view types, each capturing different aspects of data changes. Understanding these view types is crucial for selecting the one that best fits your application’s needs:

- NEW_IMAGE: This view captures and stores the entire item as it appears after the modification. Therefore, it’s ideal if you’re only interested in the final state of the data.

- OLD_IMAGE: This view captures the state of the item before the modification. As a result, it’s perfect for audit logging or situations where you need to track what was deleted or overwritten.

- NEW_AND_OLD_IMAGES: This view captures both the before and after images of the item. Consequently, it’s beneficial for tracking changes over time and maintaining a complete history of data modifications.

- KEYS_ONLY: This view captures only the primary key attributes of the modified item. Thus, it’s a lightweight option when you don’t need the full item but still want to track which records were altered.

By carefully choosing the right stream view type, you can strike the right balance between the amount of data captured and your application’s specific requirements.
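To make the trade-off concrete, here is a small sketch of something only the NEW_AND_OLD_IMAGES view type makes possible: working out which attributes changed in a single record. The helper name `changed_attributes` is hypothetical. With KEYS_ONLY, NEW_IMAGE, or OLD_IMAGE, one or both images are missing and a diff like this cannot be computed reliably.

```python
def changed_attributes(record):
    """Return the sorted names of attributes that differ between old and new images."""
    change = record["dynamodb"]
    old = change.get("OldImage", {})
    new = change.get("NewImage", {})
    # An attribute counts as changed if it was added, removed, or has a new value.
    return sorted(
        name for name in old.keys() | new.keys() if old.get(name) != new.get(name)
    )
```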

Advanced Technical Solutions with AWS DynamoDB Streams

DynamoDB Streams offers several advanced features that allow you to build sophisticated data processing workflows. Let’s dive deeper into some of these features:

1. Time to Live (TTL) with DynamoDB Streams

Firstly, Time to Live (TTL) is a DynamoDB feature that automatically deletes expired items from your table. When combined with DynamoDB Streams, TTL deletions show up in the stream as REMOVE events, allowing you to respond to them as they occur. Keep in mind that TTL deletion is a background process, so an item can be removed some time after its expiry timestamp rather than at that exact moment. This capability is handy for applications that need to maintain up-to-date caches or enforce data retention policies.
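A stream record for a TTL deletion carries a `userIdentity` field that marks it as a service-initiated delete, which lets you tell it apart from a delete issued by your own code. The check below is a minimal sketch, and the function name `is_ttl_delete` is made up for this example.

```python
def is_ttl_delete(record):
    """Return True if this stream record is a deletion performed by TTL."""
    identity = record.get("userIdentity") or {}
    return (
        record.get("eventName") == "REMOVE"
        and identity.get("type") == "Service"
        and identity.get("principalId") == "dynamodb.amazonaws.com"
    )
```

In a handler, you might use this to evict a cache entry or archive the old image only when the item expired, and ignore regular application deletes.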

2. Error Handling and Retries

Moreover, in production environments, it’s crucial to handle errors gracefully. For instance, when processing DynamoDB Streams with Lambda, a failed batch is retried, and you can cap the number of retry attempts on the trigger to handle transient failures without blocking the stream indefinitely. In addition, configuring an on-failure destination such as an SQS queue, which plays the role of a Dead-Letter Queue (DLQ), allows you to capture and investigate events that fail processing after multiple attempts. By monitoring these failures through CloudWatch, you gain visibility into your stream processing pipeline.
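One way to keep a single bad record from forcing the whole batch to be retried is Lambda's partial batch response. The sketch below assumes the trigger was configured with the `ReportBatchItemFailures` function response type. The `process` function is a hypothetical stand-in for your business logic, not part of any AWS API.

```python
def process(record):
    """Hypothetical business logic: reject records that carry no keys."""
    if "Keys" not in record["dynamodb"]:
        raise ValueError("record has no Keys")


def lambda_handler(event, context):
    failures = []
    for record in event["Records"]:
        try:
            process(record)
        except Exception:
            # Report the sequence number of the first record that failed.
            # Lambda retries from this record onward, which preserves order.
            failures.append({"itemIdentifier": record["dynamodb"]["SequenceNumber"]})
            break
    # An empty list tells Lambda the whole batch succeeded.
    return {"batchItemFailures": failures}
```

Stopping at the first failure is a design choice made here to preserve ordering: records after the failed one are retried along with it rather than processed out of order.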

3. Security Best Practices

Furthermore, security is paramount when dealing with data streams. Use AWS Identity and Access Management (IAM) roles to control access to DynamoDB Streams, and make sure that your Lambda functions have only the minimum permissions they need to operate. Also consider encrypting your data with AWS Key Management Service (KMS) keys to protect sensitive data.
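As an illustration of least privilege, here is a sketch of an IAM policy document that lets a function read from one specific stream and nothing else in DynamoDB. It is built as a Python dictionary so it can be serialized with `json.dumps`. The helper name and the example ARN are placeholders, and a real execution role would also need permission to write its logs.

```python
import json


def stream_reader_policy(stream_arn):
    """Build a minimal IAM policy document for reading a single DynamoDB stream."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "dynamodb:DescribeStream",
                    "dynamodb:GetRecords",
                    "dynamodb:GetShardIterator",
                ],
                # Scope read access to this one stream only.
                "Resource": stream_arn,
            },
            {
                "Effect": "Allow",
                "Action": "dynamodb:ListStreams",
                "Resource": "*",
            },
        ],
    }


# Placeholder ARN for illustration only.
example_arn = "arn:aws:dynamodb:us-east-1:123456789012:table/Orders/stream/*"
policy_json = json.dumps(stream_reader_policy(example_arn), indent=2)
```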

4. Scaling Considerations

Finally, DynamoDB Streams scale automatically with your table. However, if your application requires processing a high volume of events, ensure that your Lambda functions are optimized for parallel execution. You can also use Kinesis or SQS as an intermediary to manage high throughput and distribute the workload across multiple consumers.
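On the Lambda side, most of the throughput tuning happens on the trigger itself. The sketch below adjusts an existing event source mapping with a parallelization factor (concurrent batches per shard), a batch size, and a batching window. The helper name and the default numbers are illustrative assumptions, not recommendations, and the right values depend on your workload.

```python
def tune_stream_trigger(lambda_client, mapping_uuid, parallel_batches=4,
                        batch_size=500, window_seconds=5):
    """Update throughput settings on an existing stream trigger.

    `lambda_client` is assumed to be a boto3 Lambda client
    (or anything exposing the same update_event_source_mapping method).
    """
    # Lambda accepts a parallelization factor from 1 to 10 for stream sources.
    if not 1 <= parallel_batches <= 10:
        raise ValueError("parallel_batches must be between 1 and 10")

    return lambda_client.update_event_source_mapping(
        UUID=mapping_uuid,
        ParallelizationFactor=parallel_batches,
        BatchSize=batch_size,
        MaximumBatchingWindowInSeconds=window_seconds,
    )
```

Records for the same item are still handled in order when the parallelization factor is raised, so this is usually a safer first step than adding an intermediary queue.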

Advanced Use Cases

DynamoDB Streams are versatile and can be applied in a variety of advanced use cases that extend beyond simple data tracking. Let’s explore some examples:

- Building a Real-Time ETL Pipeline

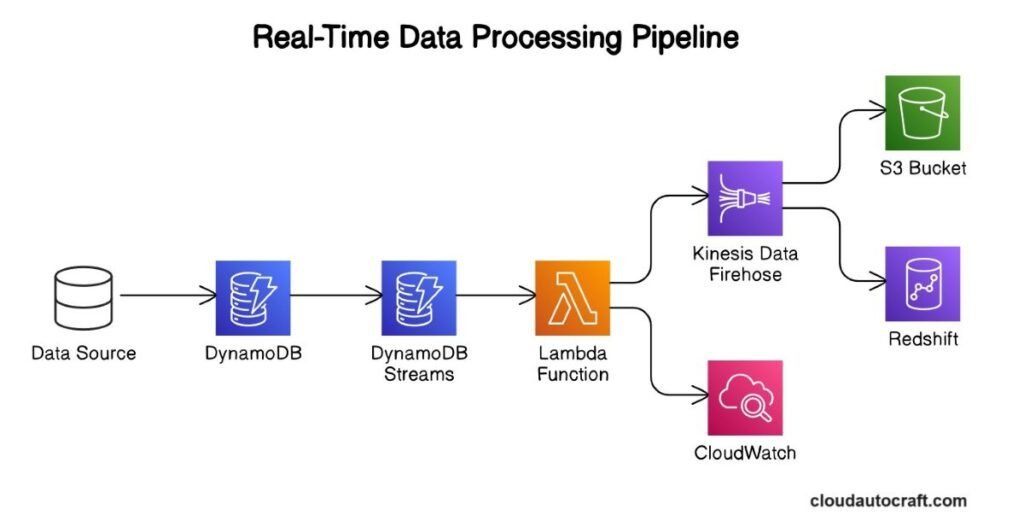

ETL (Extract, Transform, Load) processes are commonly used to move data from one system to another, often transforming it along the way. By leveraging DynamoDB Streams, you can build a real-time ETL pipeline where changes to your DynamoDB table are automatically captured, transformed, and loaded into another system, such as Amazon Redshift or S3.
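A very small version of such a pipeline might look like the sketch below: each batch of stream records is flattened into newline-delimited JSON and written to S3 as one object. The function names, bucket, and key are placeholders, and the images are kept in DynamoDB's typed JSON format for simplicity, so a real pipeline would likely add a proper transform step.

```python
import json


def records_to_ndjson(event):
    """Transform a stream event into newline-delimited JSON, one line per change."""
    lines = []
    for record in event["Records"]:
        change = record["dynamodb"]
        row = {
            "event": record["eventName"],
            "keys": change.get("Keys"),
            "new_image": change.get("NewImage"),
            "sequence_number": change.get("SequenceNumber"),
        }
        lines.append(json.dumps(row, sort_keys=True))
    return ("\n".join(lines) + "\n") if lines else ""


def load_to_s3(s3_client, bucket, key, event):
    """Write the transformed batch to S3 and return the number of bytes written.

    `s3_client` is assumed to be a boto3 S3 client
    (or anything exposing the same put_object method).
    """
    body = records_to_ndjson(event).encode("utf-8")
    if body:
        s3_client.put_object(Bucket=bucket, Key=key, Body=body)
    return len(body)
```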

- Cross-Region Replication for Disaster Recovery

Moreover, in applications requiring high availability and disaster recovery, cross-region replication keeps a copy of your data available in a second region. By using DynamoDB Streams in combination with AWS Lambda, you can automatically replicate changes made in one region to another region. As a result, you reduce downtime and data loss in the event of a regional failure.
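The core of a do-it-yourself replicator can be quite small. The sketch below applies each stream record to a replica table through a low-level DynamoDB client that points at the other region. It assumes the stream view type includes new images, the function and table names are placeholders, and binary attributes may need extra handling. If you do not need custom logic, DynamoDB Global Tables is the managed way to get the same result.

```python
def replicate(replica_client, table_name, event):
    """Apply stream records to a replica table and return how many were applied.

    `replica_client` is assumed to be a low-level boto3 DynamoDB client created
    for the target region, for example boto3.client("dynamodb", region_name=...).
    """
    applied = 0
    for record in event["Records"]:
        change = record["dynamodb"]
        if record["eventName"] in ("INSERT", "MODIFY"):
            # NewImage is already in the typed format that put_item expects.
            replica_client.put_item(TableName=table_name, Item=change["NewImage"])
        elif record["eventName"] == "REMOVE":
            replica_client.delete_item(TableName=table_name, Key=change["Keys"])
        else:
            continue  # unknown event type: skip rather than guess
        applied += 1
    return applied
```

Because put and delete by key are naturally repeatable, re-running the same batch after a retry leaves the replica in the same state.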

- Building Event-Driven Microservices

Furthermore, in a microservices architecture, services often need to respond to changes in data. With DynamoDB Streams, you can build an event-driven architecture where microservices react to changes in shared data, enabling more responsive and decoupled systems. For example, updating a search index or sending notifications based on data changes becomes seamless with DynamoDB Streams.

Best Practices for Using AWS DynamoDB Streams

To make the most out of DynamoDB Streams, here are some friendly tips to boost performance, security, and reliability:

- Keep an Eye on Stream Activity: Use AWS CloudWatch to track how your DynamoDB Streams are doing. This way, you can make sure your Lambda functions are handling events in a timely manner.

- Streamline Lambda Functions: Make your Lambda functions simple and focused on one task at a time. This helps them run smoothly and makes it easier to fix issues if they come up.

- Handle Errors Smartly: Always add error handling and retry logic in your Lambda functions. This way, temporary problems won’t lead to data loss.

- Be Mindful of Permissions: Use IAM roles with the least amount of access necessary. This means your Lambda functions and other services only get the permissions they absolutely need.

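- Plan for Growth: Make sure your stream consumers can keep up as your app grows. DynamoDB manages the stream’s shards for you, so scaling is mostly a consumer-side concern: you can process more batches per shard in parallel, keep the number of functions reading the same stream small, or hand events off to other AWS services like Kinesis.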

By following these steps, you’ll keep things running smoothly and securely as you scale up!
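For the monitoring tip, one metric worth watching is Lambda's `IteratorAge`, which reports how old the last record in a batch was when it was processed. A growing value suggests the function is falling behind the stream. The sketch below reads the recent maximum from CloudWatch, and the helper name and the 15-minute window are assumptions made for this example.

```python
from datetime import datetime, timedelta, timezone


def max_iterator_age_ms(cloudwatch_client, function_name, minutes=15):
    """Return the highest IteratorAge (in milliseconds) over the recent window.

    `cloudwatch_client` is assumed to be a boto3 CloudWatch client
    (or anything exposing the same get_metric_statistics method).
    Returns None when there are no datapoints.
    """
    end = datetime.now(timezone.utc)
    response = cloudwatch_client.get_metric_statistics(
        Namespace="AWS/Lambda",
        MetricName="IteratorAge",
        Dimensions=[{"Name": "FunctionName", "Value": function_name}],
        StartTime=end - timedelta(minutes=minutes),
        EndTime=end,
        Period=60,
        Statistics=["Maximum"],
    )
    datapoints = response.get("Datapoints", [])
    return max((point["Maximum"] for point in datapoints), default=None)
```

In practice you would more likely put a CloudWatch alarm on this metric than poll it, but the query shows which namespace, metric, and dimension to use.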

Conclusion

AWS DynamoDB Streams give you a fantastic way to handle real-time data right from your DynamoDB tables. Whether you’re diving into real-time analytics, keeping things compliant, or automating workflows, DynamoDB Streams has got you covered with flexibility, scalability, and security.

To get the most out of them, it’s worth understanding their key features, exploring the advanced solutions, and following best practices. As you weave DynamoDB Streams into your system, keep an eye on performance, fine-tune your Lambda functions, and plan for growth to ensure everything runs smoothly.


FAQs:

How do AWS DynamoDB Streams differ from Amazon Kinesis?

Answer: DynamoDB Streams and Amazon Kinesis both handle real-time data, but they serve different needs. Specifically, DynamoDB Streams focuses on tracking changes within DynamoDB tables by capturing updates, inserts, and deletes. In contrast, Amazon Kinesis is a general-purpose platform designed to process large volumes of data from a variety of sources. Therefore, DynamoDB Streams can be used to manage table-specific changes while leveraging Kinesis for broader data processing needs.

What are the pricing details for DynamoDB Streams?

Answer: DynamoDB Streams are charged based on the number of streams read request units consumed when reading stream records; each GetRecords call counts as one unit and can return up to 1 MB of data. Your costs will vary depending on how much data you’re handling and how many reads you perform. If you’re using AWS Lambda to process the stream data, you’ll also pay Lambda execution costs, which are based on the number of invocations and their duration.

How do I maintain data consistency with DynamoDB Streams across regions?

Answer: To ensure consistent cross-region replication, utilize DynamoDB Global Tables for automatic and reliable data replication. Additionally, if you are using DynamoDB Streams in conjunction with Lambda, it is crucial to ensure idempotency to manage retries effectively. Moreover, sequence numbers or timestamps can be implemented to apply updates in the correct order.
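To illustrate the idempotency and ordering points, here is a toy sketch that remembers the highest sequence number applied for each item and skips anything at or below it. The class name is made up, the in-memory dictionaries stand in for a durable store, and comparing sequence numbers as integers per item is an assumption of this sketch. A production version would typically enforce the same rule with a conditional write on the target table.

```python
import json


class IdempotentApplier:
    """Applies stream records at most once per item, in sequence order."""

    def __init__(self):
        self.last_sequence = {}  # item key -> highest sequence number applied
        self.items = {}          # item key -> latest image (stand-in for the target)

    def apply(self, record):
        change = record["dynamodb"]
        item_key = json.dumps(change["Keys"], sort_keys=True)
        sequence = int(change["SequenceNumber"])

        if sequence <= self.last_sequence.get(item_key, -1):
            return False  # duplicate delivery or stale update: skip it

        if record["eventName"] == "REMOVE":
            self.items.pop(item_key, None)
        else:
            self.items[item_key] = change.get("NewImage")
        self.last_sequence[item_key] = sequence
        return True
```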

Can DynamoDB Streams handle large-scale real-time analytics workloads?

Answer: DynamoDB Streams can support real-time analytics; however, how well they scale depends significantly on your system design. For large-scale workloads, it is advisable to pair DynamoDB Streams with Amazon Kinesis Data Streams, which is designed to handle large data volumes efficiently. Kinesis can buffer and process these volumes before sending them to analytics platforms such as Amazon Redshift or Amazon S3. This combination helps you scale your analytics pipeline effectively.

Originally posted 2024-08-23 15:20:59.